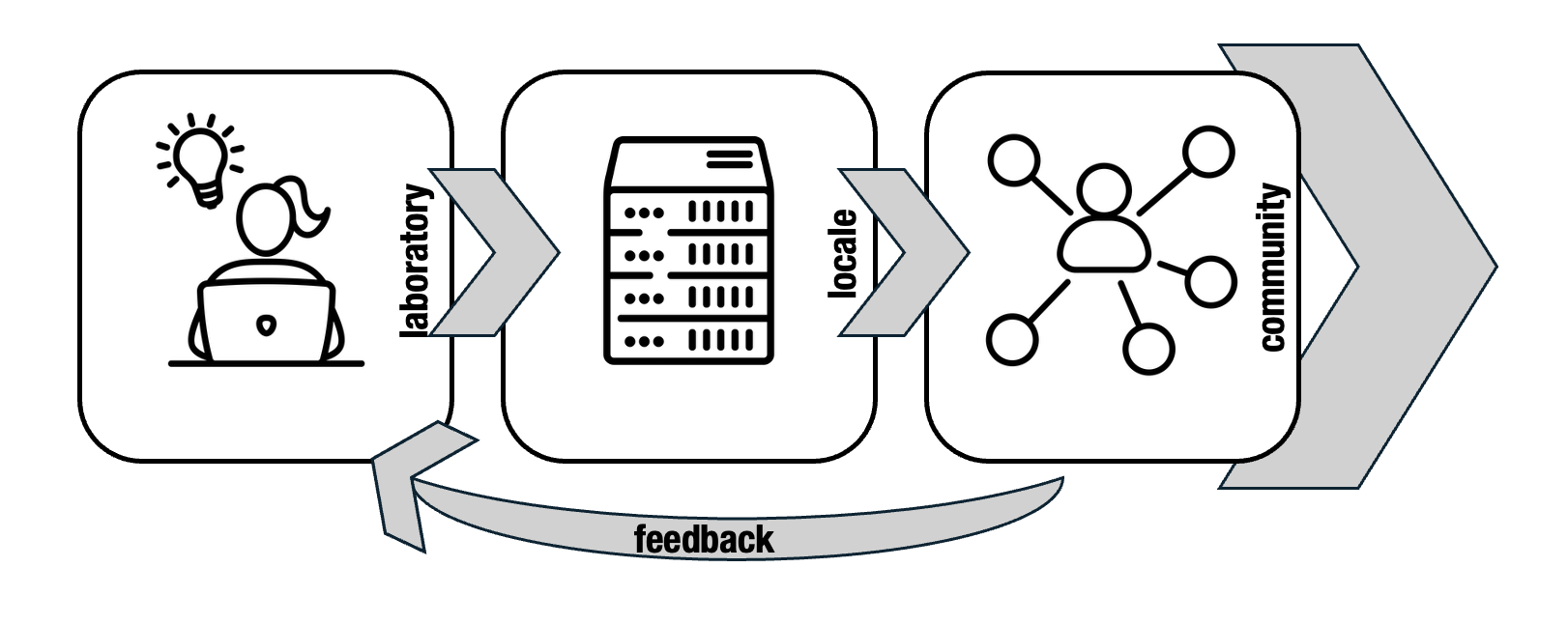

Our Approach

CHTC is a research center at UW-Madison and the home of campus research computing services. Housed across the Computer Science Department and the Morgridge Institute for Research, we are practitioners of translational computer science. CHTC develops technologies that advance high throughput computing (HTC), deploys those technologies, and provides computing capacity to a broad community of researchers. Throughout, we aim to respond continuously to researcher feedback and help researchers advance their work. Our leadership in the Partnership to Advance Throughput Computing (PATh) Project reflects this commitment to developing tools and building communities around them.

As a Lab

CHTC is a laboratory for developing high throughput computing tools and methodologies, including software such as the HTCondor Software Suite and the Pelican Platform, and for championing the practice of Research Computing Facilitation.

As a Service Provider

As part of our translational computer science mission, CHTC operates computing and facilitation services available to UW-Madison affiliates at no cost. Any UW-Madison researcher who has outgrown their current computing capacity (whether a laptop, desktop, server, or small cluster) can use CHTC's computing capacity to scale up their research. While our specialty is HTC, we can support a wide variety of computing workloads. Come talk to us or request an account!

CHTC also supports the operation of national computing infrastructure like the Open Science Pool and Open Science Data Federation.

As a Center

We bring our development activities and our services together under one roof to facilitate the exchange of information between the users and the developers of our technologies. Without feedback on what researchers need to take their work to the next level (or simply to make computing more accessible), our research cannot move forward. Our door (and inbox) is always open to hear about pain points and suggestions for improvement.